One of the most common issues I look at from day to day is some variation of the question. “Why did it take a long time for my AG/Database to failover?”. There are many different meanings for this innocuously simple looking statement, for example was it that the failover time was long or was it a long time bringing the database online, or was it that it took a long time because a failover wasn’t possible, and what *exactly* is a long time? Are we talking a long time means 10 seconds, 1 minute, 5 minutes, 30 minutes? To each different business and their needs, “long” dramatically fluctuates. I’d like to go through at a high level, some of the most common reasons that I troubleshoot and if they might apply to your environment. FYI, if you tell me 1 second is a long time then I’m going to point you toward different architectures with multiple layers of caches and front-end servers/services which isn’t going to be cheap, but that’s what you want so you’re _willing_ to pay for it, right? Yeah, I thought not.

Large Memory + Many Data Files

First, let’s understand what “Large Memory” means. It’s not generically a number, but if I had to lick a finger and put it in the air then it’d come in somewhere around 512GB on the lowest of ends. There are various other factors here such as the number of numa nodes and if whomever ordered the server and/or installed the memory did so correctly (some enterprise motherboards will not boot if you install the memory in a poor configuration, most however will let you shoot yourself in the foot). Assuming that’s not your issue, as I discussed previously databases in an availability group will go through undo of redo fairly often if not every time.

Much as I discussed in the post via the last paragraph and won’t reiterate here, if it is required to scan a large buffer pool (the largest memory consumer in SQL Server) which might take 6+ seconds on very large memory servers and there is a scan per database file, then many database files + slow scan speeds = a long time. There are a few different ways that this can be verified but a common way is to use xperf or wpr to capture the cpu events and look at cpu usage sampled data while using public symbols. You’ll see the bulk of the cpu time spent in the buffer iterator for that callstack.

Antivirus/HIPS/Auditing/Backup Software

Yeah, this one covers a myriad of software level items for good reason – they all cause issues in various ways, but the outcome is the same which is taking longer to bring the database up as a primary (or secondary!).

Antivirus/HIPS/Auditing: These software suites typically contain at least two different segmented parts. The first part is a kernel level module or set of modules, which may span multiple different technology stacks, such as disk, network, and general kernel. The second part generally consists of a user mode service (or set of services) that interact with the kernel level modules, whether it be configuration, reporting, or other functionality that is local to that software. Do not let your co-workers lie to you, even if the software is “disabled” or “in monitoring mode” and the kernel modules are loaded, it is still in the critical path of whatever it is doing which means it’s still actively intercepting and touching the items on the system. If someone tells you that’s not the case, then you’ll want to find a better co-worker as that one clearly can’t be trusted.

Backup Software: This tends to sit at one or more of three different layers. The first being the actual operating system environment as a kernel module which is then responsible for tracking or otherwise working with the changed files or blocks (if block level). The second being the host in a virtualized environment, where the host is doing this instead of in each guest. The last is the storage itself, where it might have multiple distinct snapshots or copies that use a block level copy on write or other means to keep data and snapshots in sync.

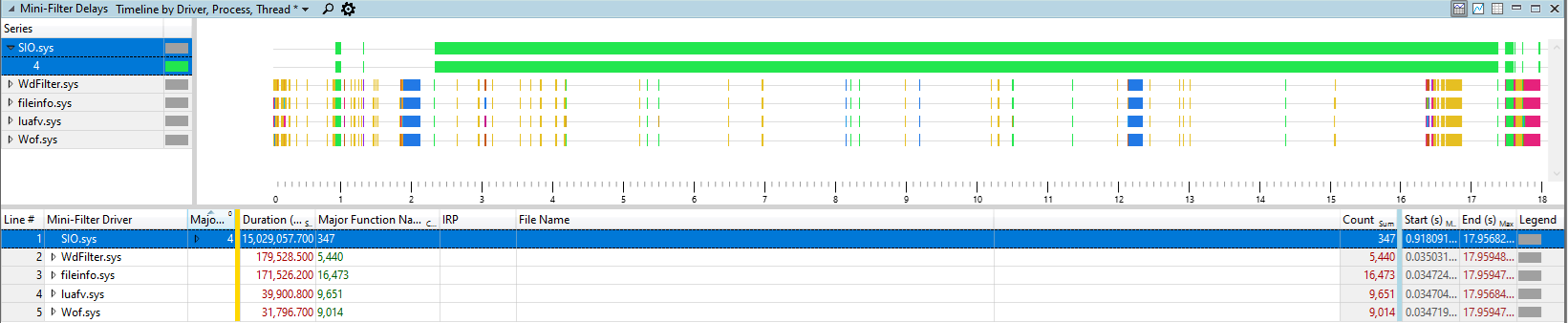

Figuring out if one of these are to blame can be rough but is generally straight forward, the catch here being that anything happening outside of the operating system environment (such as anything outside of the OS in the guest) you won’t be able to generally troubleshoot from inside the guest. The go to here is again xperf or wpr, specifically obtaining the cpu and minifilter events. To give you an idea what this looks like, I wrote a small minifilter driver that acts like an antivirus or antimalware software module. The below screenshot is representative of issues you’ll find when these types of programs are not behaving correctly.

The above screenshot is from an 18 second capture, sorted by driver and major IRP function. In this case this driver (that I wrote to show bad behavior) is only causing issues with writes but not reads. There are a multitude of functions that an IRP can have but this is one of the main ones. Note that of the 18 seconds, SIO.sys spends slightly over 15 seconds in the critical path.

If a perfmon (logman) capture and an xperf/wpr capture do not show any significant delays, it could be happening at a level below the operating system in which case a storport trace would be needed, or it could be starvation of the cpu for the application to respond to the completed IO requests. SQL Server, as an example, has other completion routines that will run after Windows has marked the IO completed. These are only executed after a context switch on the scheduler that submitted the IO to Windows, and thus schedulers starved for cpu via other means may show significant slowness in IO.

Patching And Upgrades

When secondary replicas (or a primary for that matter) is patched, the databases will need to be resynchronized with the new and potentially changed metadata – such as changes to on disk formats or creation of internal items that the upgrade or CU requires (Service Packs are dead, we’re not going there). Because of this requirement, the databases are going to be restarted to sync up, and this is fairly telling as checking the SQL Server errorlog it would be trivial to find a patch was installed and then a failover occurred at some later point. There’s no high-tech gizmo needed here, just looking at the long… which I’ve found to be a non-trivial request from many of you. For shame, you call yourself a DBA.

Long Or Huge Transactions

Remember the phases of recovery? One of those is redo and one of those is undo (and if a secondary replica one of those might be undo of redo), so if you’re willy-nilly rebuilding your indexes every day because you hate your infrastructure and you don’t know any better, then this one is for you. The database won’t be able to come up as a primary database replica until the recovery phases are complete. If there is a long running open transaction, this could take a while. If there is a huge open transaction, then settle in and get comfortable. Again, there isn’t anything high-tech needed, just a look into the errorlog and notice that the database is going through redo or undo – still. It’ll print out an estimated amount of time remaining. Please, do not do what everyone consistently either does (or asks, you know, like for a friend and stuff) and restart the instance. If you do this, it’ll have to start over and now you’ve wasted however long it was doing work.

There are two new features, one in SQL Server 2019 and one in 2017 (this should be, if nothing else, a reason to get off 2008/2012/2014/2016 and go to 2019 [latest at the time of this writing]). The first is Accelerated Database Recovery or Constant Time Recovery (whichever you want to call it these days) and the second, for you luddites that are 100% hell bent on doing things the absolutely work possible way, is resumable index rebuilds.

Undersized Server

One would like to think that I didn’t need to cover this one, and I had thought about not writing it… sadly, there are too many of these that come up and here we are, such a sad state of affairs. This one is for that admin who really hates giving servers cpu or memory, or for the DBA that thinks, “I can absolutely put 800 databases in a single AG on an 8 core server!”. Generally, when this is the case, there are other tell-tale signs, such as partial failovers where some databases just don’t seem to recover, ever. The evidence for this can easily be found in two places, the first being the errorlog which will generally complain about not being able to spawn new threads. The second is the sp_server_diagnostsics extended events data that is collected by default (if not turned off, which is manually required, tsk tsk) where the output of the resource data returned can be checked for the max server workers against the current workers. That means, yes, I totally catch you when you say, “We didn’t run out of worker threads” and then I see the instance, using that same 8-core server, is set to 5,000 worker threads – 100% red handed as that value also requires a manual change.

The solution here is to either move databases onto other servers and spread the load or get a larger server. I’m in favor of spreading the load around to multiple servers, personally.

1 thought on “Availability Group Long Failover Times”

Comments are closed.