I’ve been playing around with a few different NVMe drives lately, looking at their performance characteristics, and the output of some initial testing made me want to write a quick post.

Today, cloud is still the hotness (well, if you get past everyone saying AI like they said ML a few years ago… I wonder what the next thing will be?). But with costs continuing to rise for modest hardware, and with fully managed options hitting walls, some places are saving large sums of money by bringing workloads back in house. Others are still looking to implement their own hybrid strategy, and some are expanding their own internal cloud setups and offerings. It really doesn’t matter where you’re running your stuff so long as it fits your needs and is within budget, though real performance happens with your own hardware and configurations.

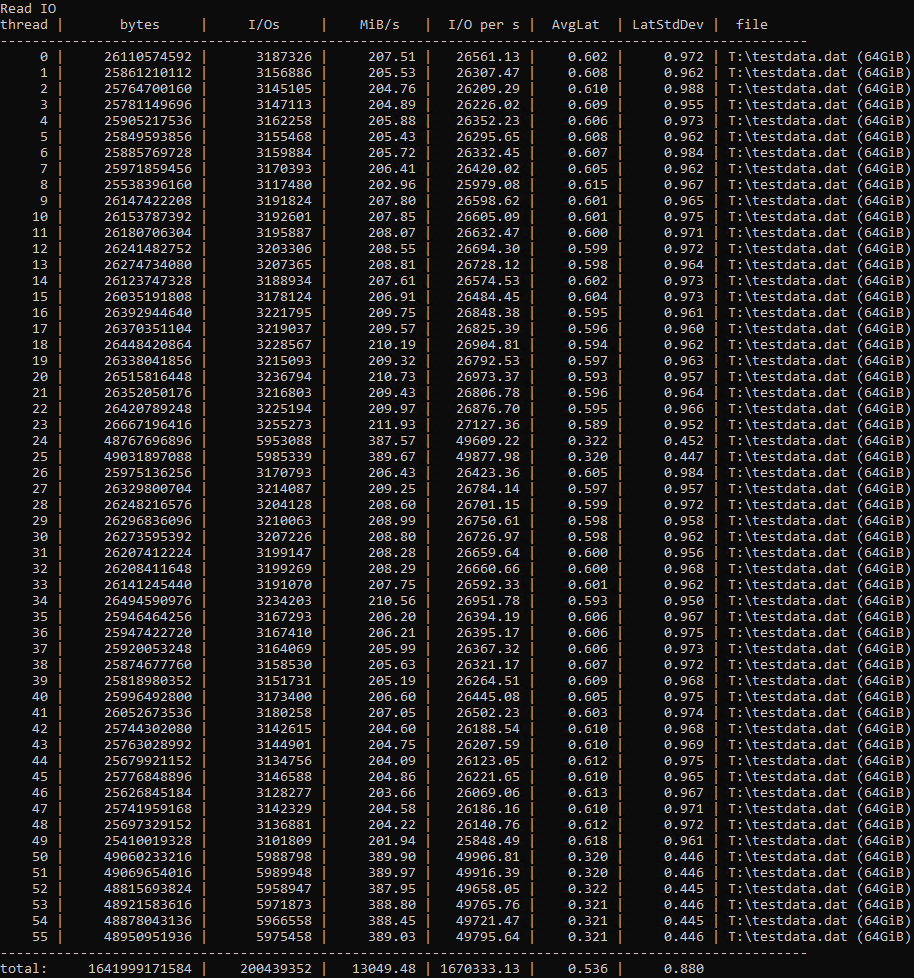

With this in mind, looking at commodity hardware to run workloads isn’t anything new. It’s been done for decades at this point, but I’d argue that the hardware released over the last few years brings enterprise-level performance to most people without the need for specialty hardware with price tags in the millions. Using some of the latest consumer NVMe drives and very inexpensive hardware, I was able to push (on this 8TB volume) 13 GB/s using 8k reads, for a total of 1.67 million IOPS averaging 500 microseconds per I/O. The price of this performance? $665 USD, one time, including the add-in card to fit them all in a PCIe 4.0 x16 slot. That’s the same as the price per month of a P50 in Azure, which is 4 TB with 7,500 IOPS and 250 MB/sec of bandwidth. Now, this isn’t an apples-to-apples comparison, as cloud-based disks typically include a redundant copy or copies and can typically be moved between virtual machines, etc., so I’m not stating there aren’t other tangible items to discuss. However, it does show just how capable hardware one generation behind the latest has become, increasingly leading to cost savings and performance gains just by running things yourself.
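
As a quick sanity check on those numbers, here is a minimal Python sketch of the arithmetic. It assumes "8k" means 8 KiB per read and that the workload is in steady state (so Little's law applies); the variable names are just for illustration.

```python
# Figures quoted in the post
iops = 1_670_000          # 8k read IOPS measured on the local volume
block_bytes = 8 * 1024    # assumption: "8k" means 8 KiB per I/O
avg_latency_s = 500e-6    # 500 microseconds average per I/O

# Throughput is simply IOPS multiplied by the I/O size
throughput_bytes = iops * block_bytes
throughput_gb = throughput_bytes / 1e9       # decimal GB/s, about 13.68
throughput_gib = throughput_bytes / 2**30    # binary GiB/s, about 12.74

# Little's law: I/Os in flight = completion rate x average latency
outstanding_ios = iops * avg_latency_s       # about 835 I/Os in flight

# Azure P50 figures as quoted in the post
p50_iops = 7_500
p50_mb_s = 250
iops_ratio = iops / p50_iops                            # about 223x
bandwidth_ratio = throughput_bytes / (p50_mb_s * 1e6)   # about 55x

print(f"{throughput_gb:.2f} GB/s, {outstanding_ios:.0f} I/Os in flight")
print(f"{iops_ratio:.0f}x the IOPS, {bandwidth_ratio:.0f}x the bandwidth")
```

The roughly 835 outstanding I/Os is the total across all test threads implied by the quoted rate and latency, not a setting taken from the test itself.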

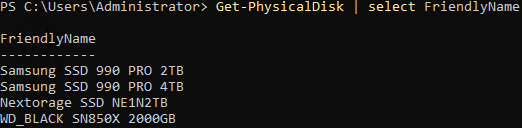

This specific setup is a simple storage space with a 256k interleave using 4 columns. There are 4 total drives connected to a single PCIe 4.0 NVMe expansion card: a Samsung 990 Pro 4TB, a Samsung 990 Pro 2TB, a WD Black SN850X 2TB, and a Nextorage 2TB. These aren’t “enterprise” SSDs, but the Samsung 990 Pro 2TB has a rated endurance of 1,200 terabytes written (TBW), which is 1.2 petabytes, and a replacement cost $130 USD at the time of writing, so it’s cheap and effective. Ideally you wouldn’t mix and match the drives, but this is what I was testing, unless someone wants to send me 3 more of these drives 🙂
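
To picture what a 256k interleave across 4 columns means, here is a simplified RAID-0-style sketch in Python. It is only a model of striping, not how Storage Spaces actually allocates space internally, and the `locate` helper is a hypothetical name used for illustration.

```python
INTERLEAVE = 256 * 1024   # bytes per stripe unit, from the post
COLUMNS = 4               # number of columns, from the post


def locate(offset: int) -> tuple[int, int]:
    """Map a virtual-disk byte offset to (column, byte offset within that column).

    Simplified striping model: consecutive 256 KiB units rotate across columns.
    """
    unit = offset // INTERLEAVE          # which stripe unit the offset falls in
    column = unit % COLUMNS              # units rotate round-robin over columns
    row = unit // COLUMNS                # how many full stripes precede this unit
    return column, row * INTERLEAVE + offset % INTERLEAVE


# A 1 MiB sequential run of 8 KiB reads lands on every column once
columns_touched = {locate(o)[0] for o in range(0, 1024 * 1024, 8 * 1024)}
print(sorted(columns_touched))
```

In this model each stripe needs space on every column, so with four columns on four drives the usable capacity is bounded by four times the smallest drive (4 × 2 TB), which is consistent with the 8TB volume mentioned earlier.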

What’s the use case for this? I work with high availability a whole bunch, and one of the things that you can do with SQL Server Availability Groups, for example, is to have dedicated individual machines offer both disaster recovery and high availability. Loading up commodity machines with terabytes of local NVMe and some decent RAM means that losing one drive isn’t a big deal, since you have other replicas to take care of it. Replace the drive and seed the data again. Seeding could completely consume 40Gb networking (5 GB/sec), which means that even for multi-terabyte databases this could take minutes (assuming SQL Server is configured properly for the setup). That’s if you need that level of performance, of course, which is why configuring for what you actually need really helps, as does the overall cost savings.
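
For a rough feel of the "minutes" claim, here is a back-of-the-envelope Python sketch. It assumes the network link is the only bottleneck; the `seed_minutes` function and its `efficiency` parameter are hypothetical, added purely to illustrate the arithmetic.

```python
def seed_minutes(database_tb: float, link_gbit: float = 40.0,
                 efficiency: float = 1.0) -> float:
    """Estimate minutes to copy a database over a saturated network link.

    database_tb: database size in decimal terabytes
    link_gbit:   link speed in gigabits per second (40Gb in the post)
    efficiency:  assumed fraction of line rate actually achieved (1.0 = ideal)
    """
    bytes_total = database_tb * 1e12
    bytes_per_s = link_gbit * 1e9 / 8 * efficiency   # 40 Gb/s is 5 GB/s
    return bytes_total / bytes_per_s / 60


for size_tb in (1, 2, 4):
    print(f"{size_tb} TB: about {seed_minutes(size_tb):.1f} minutes at line rate")
```

At full line rate that works out to a little over 3 minutes per terabyte. Real seeding would likely be slower, since protocol overhead and the source and target storage also play a part.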

Care to share what PCIe NVMe card you used?

Apologies, I used the “EZDIY-FAB Quad M.2 PCIe 4.0/3.0 X16 Expansion Card with Heatsink” which was $42 at the time. There are various other cards available. This one DOES require that the motherboard support PCIe bifurcation for the slot. It was connected to a PCIe slot dedicated to CPU 1 while the threads were affinized to CPU 0, which makes this the worst case as it requires going over QPI (Intel).